With a number of attendees having similar ballots, I changed the paradigm a couple of years back, adapting a raffle mechanic similar to the one in the board game Killer Bunnies and the Quest for the Magic Carrot. In that game, players start with one magic carrot card and work to collect more. At the end of the game, when all the carrots have been found, the true Magic Carrot is revealed and the player with the matching card is the winner. One player may have more than half the carrots, but even a player in the back of the pack with 1-2 carrots to their name still has a chance to win.

I bought a roll of tearaway raffle tickets, and everyone playing gets one, plus another for each successful guess. At the end of the night, we draw to see who wins the modest door prize (typically a night at the movies).

To create even more variety, I am thinking of having an upset bonus, and giving double tickets for any category winner that isn't the favourite. I popped on to a couple of websites over lunch today to do some research and was fascinated by what I found.
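For the curious, here is a rough Python sketch of how the ticket math might work with the upset bonus. The function names, the category and film labels, and the idea of treating "favourite" as a single predicted pick per category are all my own assumptions for illustration; none of it is an official scoring system.

```python
import random


def ticket_count(picks, winners, favourites, upset_multiplier=2):
    """Count raffle tickets for one player.

    picks, winners and favourites are dicts mapping category -> name.
    The upset_multiplier of 2 reflects the "double tickets" idea and is
    an assumption, not a settled rule.
    """
    tickets = 1  # everyone playing gets one ticket just for showing up
    for category, winner in winners.items():
        if picks.get(category) == winner:
            # An upset is a winner that was not the predicted favourite.
            is_upset = favourites.get(category) != winner
            tickets += upset_multiplier if is_upset else 1
    return tickets


def draw_winner(tickets_by_player, rng=random):
    """Draw one player, weighted by how many tickets each one holds."""
    players = list(tickets_by_player)
    weights = [tickets_by_player[p] for p in players]
    return rng.choices(players, weights=weights, k=1)[0]


# Hypothetical two-category night: one upset, one favourite.
favourites = {"Best Picture": "Film A", "Best Director": "Director X"}
winners = {"Best Picture": "Film B", "Best Director": "Director X"}
my_picks = {"Best Picture": "Film B", "Best Director": "Director X"}

my_tickets = ticket_count(my_picks, winners, favourites)
```

As in the carrot game, the draw is weighted rather than winner-take-all, so someone holding a single ticket can still walk away with the prize.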

I visited the aforementioned GoldDerby and also FiveThirtyEight.com, statistician Nate Silver's blog that had me convinced someone else was going to win the U.S. presidency in 2016 (sigh). Gold Derby has a number of experts they poll, while FiveThirtyEight uses a complex algorithm based on other awards won and their relative correlation to winning an Academy Award. For instance, the winner of BAFTA's Best Picture trophy has won the same Oscar 12/25 times.

Silver's site only looks at the 'major' categories, while GoldDerby offers a prediction for every single award. I copied the winners from both sites onto the printable ballot from Vanity Fair, and was astonished at how few differences there were between the two; I had fully expected to make far greater use of my coloured highlighters.

Of the eight categories that FiveThirtyEight weighed in on, they only differed under Best Picture, and then only barely; they give The Shape of Water a narrow edge while GoldDerby says it is too close to call between that film and Three Billboards Outside Ebbing, Missouri (although I now see that they have given Three Billboards the edge since Feb 27). Both sites also see Guillermo del Toro taking home the Best Director trophy for his fishy monster pic.

They agree that Three Billboards will win for Best Actress and Supporting Actor, with Darkest Hour and I, Tonya winning Best Actor and Supporting Actress. They also concur on Coco running away with Best Animated Feature.

If you are at all curious, I strongly recommend checking out these sites yourself and taking a look at their rationale; it is fascinating stuff. FiveThirtyEight in particular makes a point about how difficult it is to build a predictive model for a vote that is so subjective and that also uses ranked ballots, with 'wasted' ballots going to their second and third choices until there is a winner with a majority of the vote.
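To see how a ranked ballot can flip a result, here is a simplified instant-runoff count in Python. It is only a sketch of the general technique, not the Academy's exact procedure: the candidate names and ballots are made up, and breaking elimination ties alphabetically is an arbitrary choice of mine.

```python
from collections import Counter


def instant_runoff(ballots):
    """Return the winner of a simplified instant-runoff count.

    Each ballot is a list of candidates in order of preference.
    Returns None if no ballot names any candidate.
    """
    remaining = {choice for ballot in ballots for choice in ballot}
    while remaining:
        # Each ballot counts for its highest-ranked surviving candidate.
        tally = Counter()
        for ballot in ballots:
            for choice in ballot:
                if choice in remaining:
                    tally[choice] += 1
                    break
        total = sum(tally.values())
        if total == 0:
            return None
        leader, votes = tally.most_common(1)[0]
        if votes * 2 > total:  # strict majority of the live ballots
            return leader
        # Otherwise drop the weakest candidate and redistribute.
        # Ties are broken alphabetically (an arbitrary assumption).
        loser = min(remaining, key=lambda c: (tally[c], c))
        remaining.discard(loser)
    return None


# Made-up ballots: "A" leads on first choices but lacks a majority.
ballots = (
    [["A", "B", "C"]] * 4
    + [["B", "C", "A"]] * 3
    + [["C", "B", "A"]] * 2
)
winner = instant_runoff(ballots)
```

In this toy example "A" has the most first-place votes (4 of 9), but once "C" is eliminated its two ballots move to "B", which then wins 5 to 4. A film that is many voters' second choice can overtake the apparent front-runner the same way.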

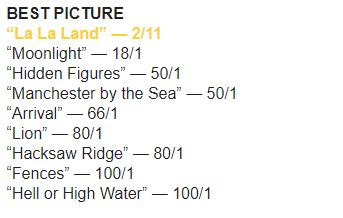

This is no doubt part of the reason that Moonlight came out of nowhere with the win last year, surprising even the announcers and the predicted winners, La La Land:

(Man, I hope no one lost their shirt leveraging their house to win $40K on a 'sure thing', but you know someone probably did...)

Interestingly enough, one site did pick Moonlight to win, and did it by crowdsourcing.

James England's site gets its considerable userbase to vote on movies they've seen using a series of match-ups, which effectively simulates the ranked ballots used by the actual Academy. I did this today, and although it was a bit more time-consuming than anticipated, it breaks the ranking of 9 pictures (plus directors, actors, etc.) into far more manageable chunks, similar to a bracketing contest. As with last year, England has gone against the grain with his prognostication, although he will continue to update based on user input:

I enjoyed Lady Bird quite a bit, but I am astonished to see it in such a strong position, while Shape of Water and Three Billboards languish in 4th and 5th place, respectively! I was glad to see Get Out, one of my favourites from this year, in second place.

Conventional wisdom says that Academy voters are unlikely to throw a statuette at young, first-time nominees with whole careers ahead of them, and are more likely to award based on a body of work, perhaps Paul Thomas Anderson for Phantom Thread. After all, Jordan Peele and Greta Gerwig show up as 4th and 5th in his model.

On the other hand though, JamesEng.land has correctly called the Best Picture for the past two years, so who knows? Maybe this third time will be the charm for Lady Bird.
